Understanding Neural Networks: A Beginner's Guide

Quest Lab Team

"Neural networks represent one of the most significant technological advances of our time, mimicking the human brain's structure to solve problems that traditional computing approaches couldn't tackle."

The Fundamental Building Blocks of Neural Networks

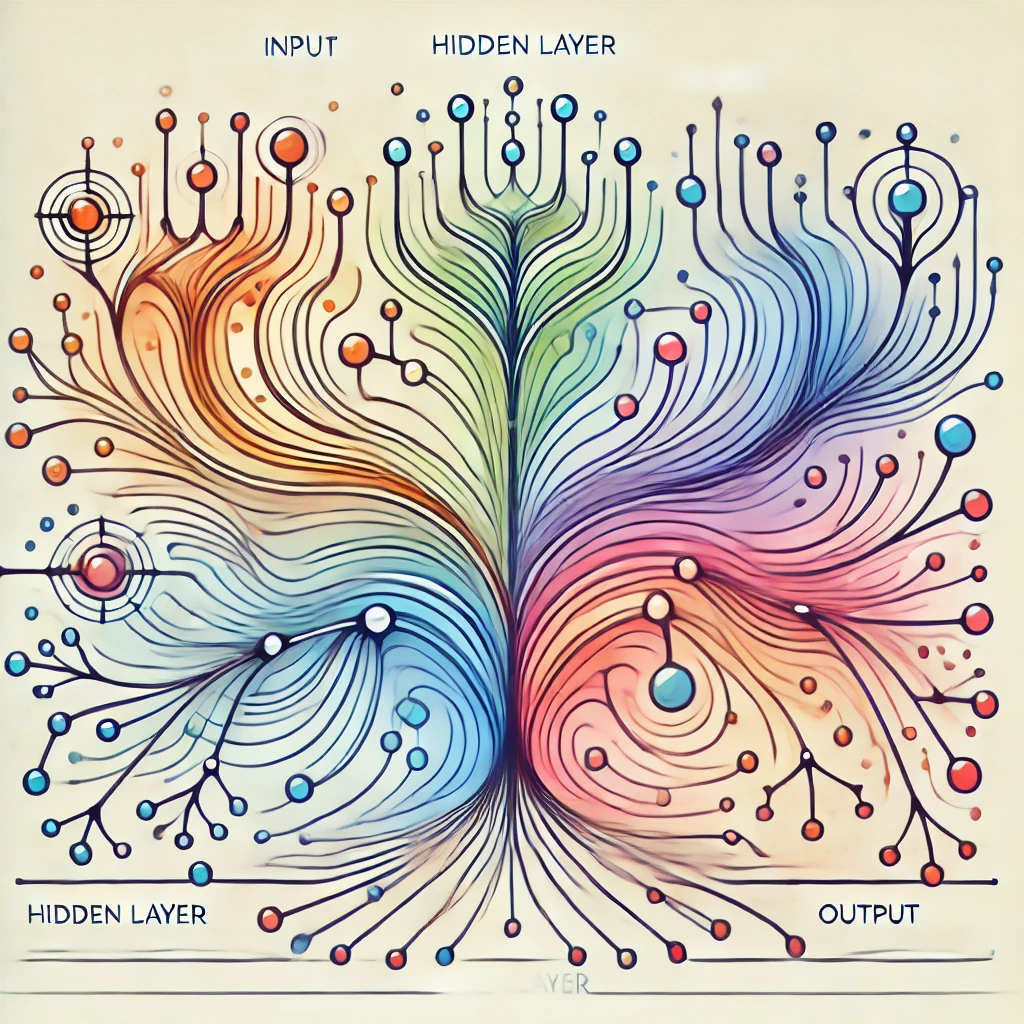

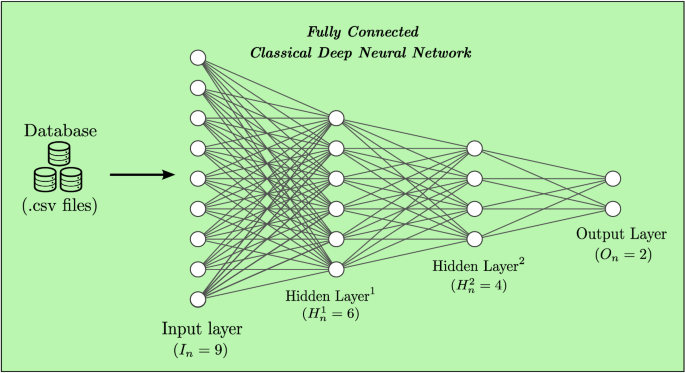

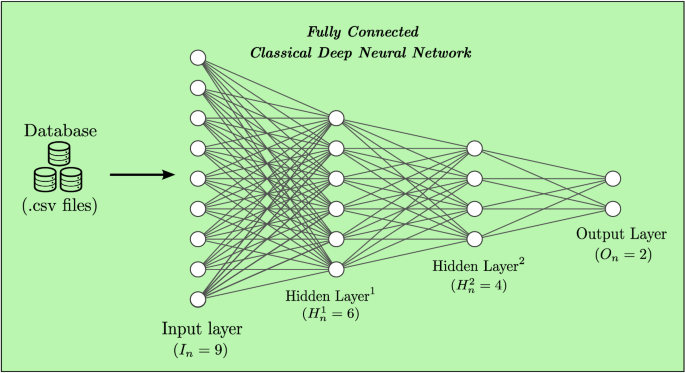

At their core, neural networks are sophisticated mathematical models inspired by the biological neural networks that constitute animal brains. Just as our brains consist of interconnected neurons that process and transmit information, artificial neural networks comprise layers of nodes (artificial neurons) that process and pass data through weighted connections.

Key Components of Neural Networks

Understanding these fundamental elements is crucial for grasping how neural networks function:

- Input Layer: The gateway for data entering the network

- Hidden Layers: Where the complex processing occurs

- Output Layer: Produces the final result or prediction

- Neurons (Nodes): The basic processing units

- Weights and Biases: Parameters that are adjusted during training
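To make these components concrete, here is a minimal sketch of a single artificial neuron in plain Python. It computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function. The function name `neuron` and the choice of a sigmoid activation are illustrative assumptions, not part of any library.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """A single artificial neuron: weighted sum of inputs plus bias, then activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Two inputs with hand-picked (hypothetical) weights and bias.
print(neuron([1.0, 2.0], [0.5, -0.25], 0.0))  # weighted sum is 0.0, so prints 0.5
```

Training, described later in this article, is the process of adjusting `weights` and `bias` automatically instead of picking them by hand.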

The Architecture of Neural Networks

Neural networks come in various architectures, each designed to excel at specific types of tasks. The simplest form is the feedforward neural network, where information flows in one direction, from input to output. However, the field has evolved to include more sophisticated architectures that can handle increasingly complex problems.
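A feedforward network is simply neurons arranged in layers, with each layer's outputs feeding the next. The sketch below assumes fully connected layers, ReLU in the hidden layer, and arbitrary hand-picked weights; the helper names `dense`, `relu`, `identity`, and `feedforward` are hypothetical. It shows information flowing in one direction, from input to output.

```python
def relu(z: float) -> float:
    return max(0.0, z)

def identity(z: float) -> float:
    return z

def dense(inputs, weights, biases, activation):
    """Fully connected layer: one row of weights (and one bias) per output neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def feedforward(x):
    # Hidden layer: 2 inputs -> 3 neurons (weights are arbitrary, for illustration only).
    hidden = dense(x, [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, -1.0], relu)
    # Output layer: 3 hidden neurons -> 1 output, no activation.
    return dense(hidden, [[1.0, 1.0, 1.0]], [0.0], identity)

print(feedforward([2.0, 1.0]))  # hidden layer gives [1.0, 1.5, 0.0], so prints [2.5]
```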

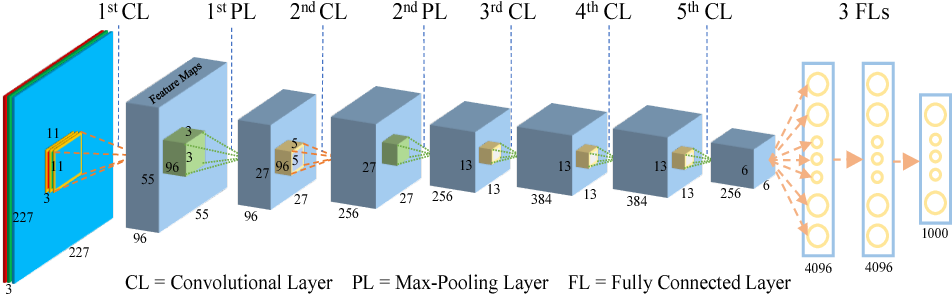

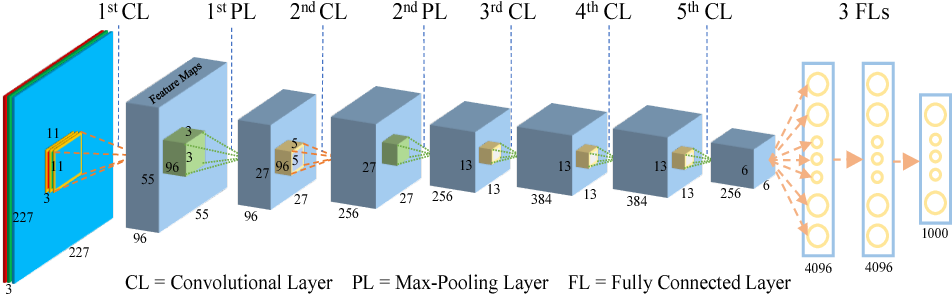

- Convolutional Neural Networks (CNNs): Specialized for processing grid-like data, such as images. They use convolutional layers to detect features and patterns hierarchically.

- Recurrent Neural Networks (RNNs): Designed to work with sequential data by maintaining an internal memory of previous inputs.

- Transformers: An architecture built around self-attention that has become the backbone of modern natural language processing.

- Generative Adversarial Networks (GANs): Consist of two networks trained against each other, a generator that produces synthetic data and a discriminator that tries to tell it apart from real data.
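The "internal memory" of an RNN is a hidden state that is updated at every time step from the current input and the previous state. The sketch below assumes a single hidden unit, a tanh activation, and hand-picked weights; the parameter names `w_x`, `w_h`, and `b` are illustrative.

```python
import math

def rnn_forward(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Run a one-unit vanilla RNN over a sequence and return every hidden state.

    h_t = tanh(w_x * x_t + w_h * h_{t-1} + b), starting from h_0 = 0.
    """
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)  # new state mixes the input with the memory
        states.append(h)
    return states

states = rnn_forward([1.0, 0.0, 0.0])
print(states)
```

Even though the last two inputs are zero, the hidden state stays positive and decays gradually: the network still "remembers" the first input.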

The Learning Process: How Neural Networks Train

The magic of neural networks lies in their ability to learn from examples. This learning process, known as training, involves exposing the network to large amounts of data and adjusting its internal parameters (weights and biases) to minimize the difference between its predictions and the actual desired outputs.

The Training Process

Neural network training involves several key steps and concepts:

- Forward Propagation: Data flows through the network to generate predictions

- Loss Calculation: Measuring the error in predictions

- Backpropagation: Computing gradients to understand how to adjust weights

- Optimization: Updating weights to improve performance
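The four steps can be seen end to end in a deliberately tiny example: fitting a single linear neuron `y = w * x + b` to points on the line y = 2x + 1 using mean squared error and plain gradient descent. The gradients are derived by hand here; frameworks such as PyTorch and TensorFlow compute them automatically through backpropagation. The data, learning rate, and epoch count are illustrative choices.

```python
def train(xs, ys, lr=0.05, epochs=2000):
    w, b = 0.0, 0.0  # parameters start at zero
    n = len(xs)
    loss = float("inf")
    for _ in range(epochs):
        # 1. Forward propagation: predictions for every example.
        preds = [w * x + b for x in xs]
        # 2. Loss calculation: mean squared error.
        errors = [p - y for p, y in zip(preds, ys)]
        loss = sum(e * e for e in errors) / n
        # 3. Backpropagation: gradients of the loss w.r.t. w and b (derived by hand).
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # 4. Optimization: take a small step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, loss

xs = [0.0, 1.0, 2.0, 3.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b, loss = train(xs, ys)
print(round(w, 3), round(b, 3))  # converges to approximately 2.0 and 1.0
```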

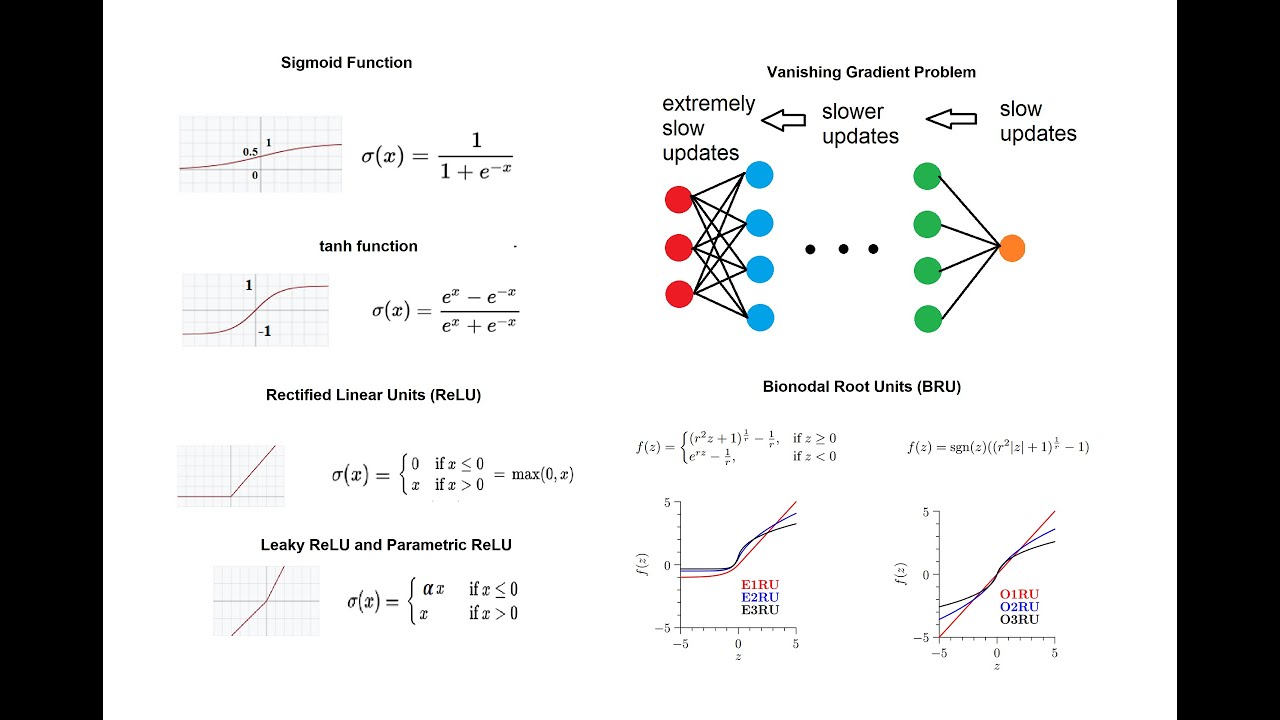

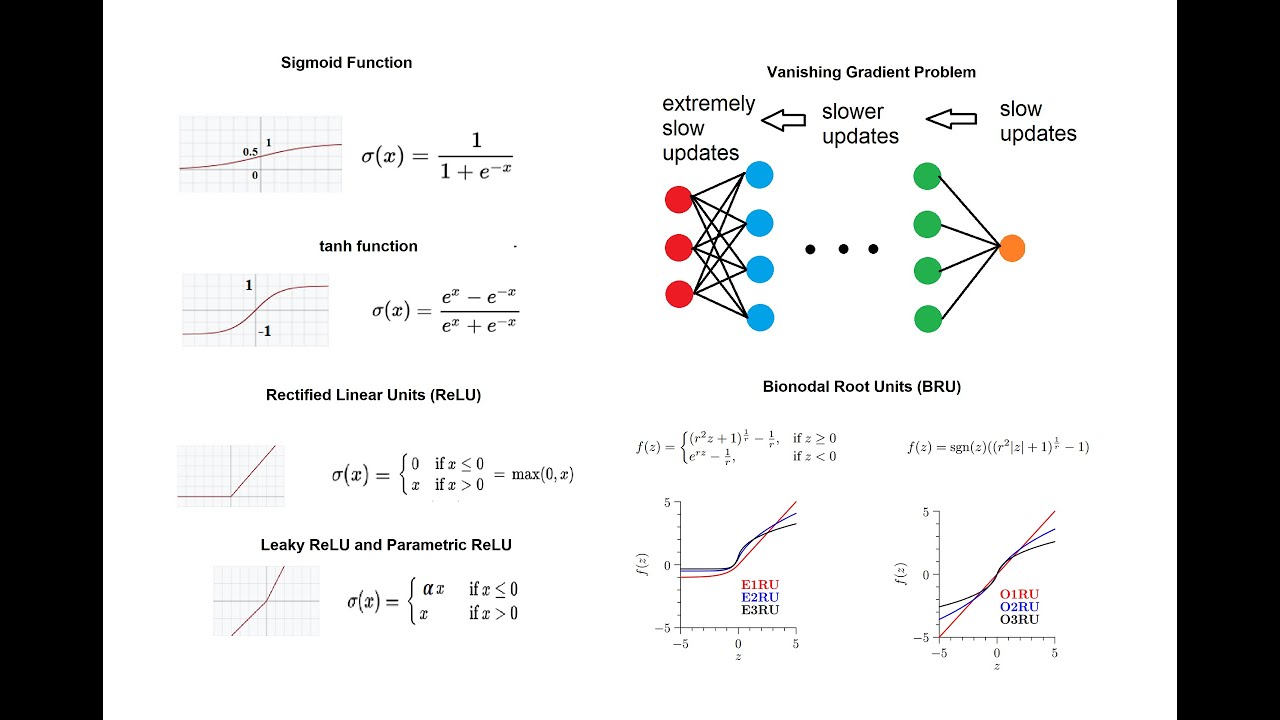

Activation Functions: Adding Non-linearity

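Activation functions are crucial components that introduce non-linearity into neural networks, allowing them to learn complex patterns. Without activation functions, any stack of layers would collapse into a single linear transformation, because composing linear functions yields another linear function. The network would then be limited to learning linear relationships no matter how deep it was, significantly restricting its capabilities.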

- ReLU (Rectified Linear Unit): The most commonly used activation function, known for its computational efficiency and its effectiveness in mitigating the vanishing gradient problem.

- Sigmoid: Maps outputs to a range between 0 and 1, useful for binary classification problems.

- Tanh: Similar to sigmoid but maps to a range between -1 and 1, often used in the hidden layers.

- Softmax: Used in the output layer for multi-class classification problems, converting a vector of raw scores into probabilities that sum to 1.
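Each of these activation functions is a short formula. The sketch below implements them with the Python standard library only. The softmax subtracts the largest score before exponentiating, a common trick that avoids overflow without changing the result.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    return math.tanh(z)

def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

print(relu(-2.0), relu(3.0))     # 0.0 3.0
print(sigmoid(0.0), tanh(0.0))   # 0.5 0.0
print(softmax([1.0, 2.0, 3.0]))  # three probabilities, largest for the last score
```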

Practical Applications of Neural Networks

The applications of neural networks span virtually every industry and field of study. Their ability to recognize patterns and learn from examples has made them invaluable tools in solving complex real-world problems.

Key Application Areas

Neural networks are transforming numerous fields through their powerful capabilities:

- Computer Vision: Image recognition, object detection, and scene understanding

- Natural Language Processing: Translation, text generation, and sentiment analysis

- Healthcare: Disease diagnosis, drug discovery, and medical image analysis

- Finance: Risk assessment, fraud detection, and market prediction

- Autonomous Systems: Self-driving cars, robotics, and drone navigation

The Journey of Neural Networks: From Biology to Silicon

In the quiet halls of research laboratories during the 1940s, scientists first began to dream of machines that could think like humans. Inspired by the intricate dance of neurons in our brains, they embarked on a journey that would span decades, facing numerous winters and springs of artificial intelligence. Today, as we witness neural networks powering everything from our smartphone cameras to groundbreaking medical discoveries, it's worth reflecting on this remarkable journey.

"Every breakthrough in neural networks carries within it the dreams of countless researchers who refused to give up when others said it couldn't be done."

The Hidden Stories Behind Neural Network Breakthroughs

Behind every major advancement in neural networks lies a story of persistence and innovation. Consider the case of convolutional neural networks (CNNs), now ubiquitous in computer vision. Inspired by studies of cats' visual cortices in the 1960s, researchers spent decades refining these networks. The breakthrough came not just from mathematical insights, but from understanding how our own brains process visual information, layer by layer, feature by feature.

Tales from the Training Room

Every AI researcher has their war stories about training neural networks. Like gardeners tending to temperamental plants, they speak of the delicate balance required: too high a learning rate, and your network explodes; too low, and it refuses to learn. They tell of networks trained for weeks, only to discover a simple bug in the data preprocessing. These stories, shared at conferences and in lab meetings, form the unofficial curriculum of deep learning.
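The learning-rate trade-off in these stories can be reproduced on the simplest possible "loss", f(w) = w², whose gradient is 2w. Each gradient-descent step multiplies w by (1 - 2 * lr), so a learning rate above 1.0 makes the magnitude of w grow at every step, while a very small one barely moves it. The specific rates below are illustrative.

```python
def descend(lr, w=1.0, steps=20):
    """Minimize f(w) = w**2 with gradient descent and return the final w."""
    for _ in range(steps):
        grad = 2.0 * w   # derivative of w**2
        w -= lr * grad   # equivalent to w *= (1 - 2 * lr)
    return w

for lr in (1.1, 0.001, 0.1):
    print(f"lr={lr}: w after 20 steps = {descend(lr):.4g}")
```

With `lr=1.1` the value oscillates with growing magnitude (the network "explodes"), with `lr=0.001` it hardly changes (it "refuses to learn"), and with `lr=0.1` it moves steadily toward the minimum at 0.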

Memorable Moments in Training

Common experiences that every neural network researcher knows too well:

- The midnight eureka moment when a model finally converges

- The mysterious case of the vanishing gradients

- The unexpected patterns discovered in visualizations

- The triumph of finally beating the baseline

A Day in the Life of a Neural Network

Imagine following a single input as it journeys through a modern neural network. Starting as a simple signal—perhaps a pixel value or a word—it travels through layers of artificial neurons, each applying their own transformations. In attention layers, it briefly mingles with other inputs, forming complex relationships. Through skip connections, it takes shortcuts, preserving its original essence while picking up new meaning. Finally, it emerges transformed, contributing to a prediction, a generation, or a decision.

The Unexpected Applications

Neural networks have found homes in the most unexpected places. Marine biologists use them to track whale songs across oceans. Astronomers employ them to search for new planets in distant solar systems. A small family vineyard in France uses them to predict the perfect harvest time. Each application tells a story of human ingenuity meeting artificial intelligence, creating solutions that neither could achieve alone.

- Art Conservation: Neural networks helping restore ancient paintings by analyzing brush strokes and pigments.

- Wildlife Protection: Networks processing camera trap images to track endangered species in real-time.

- Archaeological Discovery: AI systems finding hidden structures in satellite imagery of ancient sites.

- Music Composition: Networks collaborating with composers to explore new melodic possibilities.

The Human Element in Neural Networks

While we often focus on the technical aspects of neural networks, the human stories behind their development and deployment are equally fascinating. Consider the team of medical researchers who spent years gathering data and training a network to detect early signs of diabetic retinopathy, an eye complication of diabetes, in retinal scans, potentially saving millions from vision loss. Or the environmental scientists using neural networks to predict wildlife movements, helping prevent human-animal conflicts in developing regions.

Impact Stories

Real-world examples of neural networks making a difference:

- Rural farmers using smartphone-based crop disease detection

- Artists discovering new forms of expression through AI collaboration

- Students receiving personalized education through adaptive learning systems

- Communities protecting their local environments with AI-powered monitoring

The Poetry of Patterns

Deep within neural networks, patterns emerge that mirror the fundamental structures of our universe. The hierarchical feature detection in CNNs reflects the way nature builds complexity from simplicity. The attention mechanisms in transformers echo the way humans focus on what's important while maintaining awareness of the whole. These parallels remind us that in creating artificial intelligence, we're not just engineering solutions—we're uncovering universal principles of information processing.

Lessons from Failed Experiments

Not every neural network succeeds. But even in failure, these systems teach us valuable lessons. A network trained to recognize birds that focused instead on background vegetation revealed important biases in our dataset. Another, designed to generate realistic faces but producing surreal abstractions, sparked discussions about the nature of human perception and creativity. These 'failures' often lead to deeper insights and new research directions.

"Sometimes the most interesting discoveries come from neural networks that don't behave exactly as we expected."

The Future Whispers

As we stand at the current frontier of neural network research, we can hear whispers of the future. Quantum neural networks aim to exploit quantum-mechanical effects such as superposition and entanglement, which may offer computational advantages for certain problems. Neuromorphic chips, mimicking the brain's architecture more closely than ever, suggest new paths to efficient, low-power AI. And somewhere, perhaps in a small lab or a bedroom office, someone is having an idea that will revolutionize the field once again.

Emerging Possibilities

Areas where neural networks might take us next:

- Brain-computer interfaces enhanced by neural decoders

- Sustainable AI systems powered by biological computing

- Emotional intelligence enhanced by multimodal understanding

- Collaborative networks that extend human creativity

The Symphony of Signals

In the end, neural networks are like vast orchestras, each neuron a musician playing its part in a grand symphony of computation. The data flows like music, transformed and combined in countless ways, eventually emerging as something meaningful and often beautiful. As we continue to explore and expand their capabilities, we're not just advancing technology—we're learning more about ourselves and the fascinating ways intelligence can manifest in our universe.

Challenges and Limitations

While neural networks have achieved remarkable success, they are not without their challenges and limitations. Understanding these constraints is crucial for anyone working with or implementing neural network solutions.

- Data Requirements: Neural networks typically need large amounts of high-quality training data to perform well.

- Computational Resources: Training complex neural networks requires significant computational power and time.

- Black Box Nature: The decision-making process of neural networks can be difficult to interpret and explain.

- Overfitting: Networks can become too specialized to their training data, performing poorly on new, unseen data.

Best Practices for Implementation

Successfully implementing neural networks requires careful consideration of various factors and adherence to established best practices. These guidelines can help ensure better performance and more reliable results.

Implementation Guidelines

Follow these best practices for better results:

- Start Simple: Begin with basic architectures and gradually increase complexity

- Data Preprocessing: Ensure data is clean, normalized, and properly formatted

- Validation Strategy: Use proper validation techniques to assess model performance

- Regular Testing: Continuously monitor and evaluate model performance

- Documentation: Maintain detailed records of model architecture and training parameters
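Two of these guidelines, preprocessing the data and using a proper validation split, can be sketched in a few lines. The example below assumes a single numeric feature and a fixed random seed for reproducibility; the function names are hypothetical. It standardizes values using statistics computed on the training split only, so that no information from the validation data leaks into preprocessing.

```python
import random
import statistics

def train_val_split(data, val_fraction=0.2, seed=0):
    """Shuffle a copy of the data and split it into training and validation lists."""
    items = list(data)
    random.Random(seed).shuffle(items)
    n_val = max(1, int(len(items) * val_fraction))
    return items[n_val:], items[:n_val]

def standardize(train, other):
    """Scale to zero mean and unit variance using *training* statistics only."""
    mean = statistics.mean(train)
    std = statistics.pstdev(train) or 1.0  # guard against a constant feature

    def scale(xs):
        return [(x - mean) / std for x in xs]

    return scale(train), scale(other)

values = [float(v) for v in range(10)]
train, val = train_val_split(values)
train_z, val_z = standardize(train, val)
print(len(train), len(val))  # 8 2
```

The validation values are transformed with the training mean and standard deviation, which mirrors what happens when the model later sees genuinely new data.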

Future Directions and Emerging Trends

The field of neural networks continues to evolve at a rapid pace, with new architectures, training methods, and applications emerging regularly. Understanding current trends and future directions is crucial for staying ahead in this dynamic field.

- Few-Shot Learning: Developing models that can learn from minimal examples.

- Energy Efficiency: Creating more sustainable and environmentally friendly neural network architectures.

- Interpretability: Advancing methods to understand and explain neural network decisions.

- Hybrid Systems: Combining neural networks with other AI approaches for better performance.

Getting Started with Neural Networks

For those interested in working with neural networks, there are numerous resources and frameworks available to help you get started. Popular frameworks like TensorFlow and PyTorch provide high-level APIs that make it easier to build and train neural networks.

Learning Resources

Essential resources for learning neural networks:

- Online Courses: Coursera, edX, and Fast.ai offer comprehensive courses

- Framework Documentation: Official guides for TensorFlow and PyTorch

- Research Papers: Keep up with the latest developments on arXiv

- Community Forums: Engage with communities on Stack Overflow and GitHub

- Practice Projects: Start with simple projects and gradually increase complexity

Advanced Neural Network Architectures

Beyond the fundamental architectures, the field has evolved to include sophisticated variants that push the boundaries of what's possible with artificial neural networks. These advanced architectures address specific challenges and open new possibilities for AI applications.

Cutting-Edge Architectures

Recent innovations in neural network design have led to several breakthrough architectures:

- Capsule Networks: Addressing spatial hierarchies in vision tasks

- Graph Neural Networks: Processing graph-structured data

- Neural ODEs: Continuous-depth models, well suited to irregularly sampled time series

- Mixture of Experts: A gating network routes each input to a few specialized sub-networks (experts)
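Graph neural networks are perhaps the easiest of these to sketch. In one round of message passing, each node updates its feature by aggregating its neighbors' features. The example below assumes scalar node features, mean aggregation, and a fixed mixing weight (`self_weight` is an illustrative parameter); real GNN layers learn weight matrices and usually apply a non-linearity.

```python
def message_passing_step(features, edges, self_weight=0.5):
    """One round of mean-aggregation message passing on an undirected graph.

    features: dict node -> float; edges: list of (u, v) pairs.
    new_h[v] = self_weight * h[v] + (1 - self_weight) * mean of neighbor features
    """
    neighbors = {node: [] for node in features}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    updated = {}
    for node, h in features.items():
        nbrs = neighbors[node]
        # Isolated nodes have no messages, so they simply keep their own feature.
        agg = sum(features[n] for n in nbrs) / len(nbrs) if nbrs else h
        updated[node] = self_weight * h + (1 - self_weight) * agg
    return updated

# A path graph a - b - c where only node "a" starts with a non-zero feature.
h = {"a": 1.0, "b": 0.0, "c": 0.0}
edges = [("a", "b"), ("b", "c")]
h1 = message_passing_step(h, edges)
h2 = message_passing_step(h1, edges)
print(h1)  # information from "a" has reached its neighbor "b"
print(h2)  # after a second round it also reaches "c"
```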

Attention Mechanisms and Transformers: A Deeper Dive

The attention mechanism has revolutionized how neural networks process sequential data. Initially introduced to improve machine translation, attention has become a fundamental building block in modern AI systems. The self-attention mechanism, in particular, allows models to weigh the importance of different parts of the input dynamically.

- Multi-Head Attention: Enables parallel attention computations across different representation subspaces.

- Scaled Dot-Product Attention: Computes attention weights as a softmax over query-key dot products divided by the square root of the key dimension, which keeps the scores in a range where training remains stable.

- Cross-Attention: Lets one sequence attend to another, such as a decoder attending to the encoder's outputs, which is crucial for tasks like translation.

- Sparse Attention: Reduces computational complexity by attending to select portions of the input.
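Scaled dot-product attention computes softmax(QKᵀ / √d_k) V: each query is compared with every key, the scores are divided by the square root of the key dimension d_k, and the resulting weights average the value vectors. The pure-Python sketch below assumes small lists of lists in place of tensors and omits masking and multiple heads.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, without masking."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Self-attention over three tokens: Q, K and V are the same vectors here
# (real models derive them with separate learned projections).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(x, x, x))
```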

Neural Architecture Search (NAS)

Neural Architecture Search represents a significant advancement in automating the design of neural networks. Instead of relying on human intuition and manual design, NAS uses search and optimization techniques to discover high-performing network architectures for specific tasks.

NAS Approaches

Current methods in Neural Architecture Search include:

- Reinforcement Learning-based search strategies

- Evolutionary algorithms for architecture optimization

- Gradient-based architecture search

- One-shot architecture search with weight sharing
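The evolutionary approach can be illustrated with a toy sketch. Here an "architecture" is reduced to two hypothetical choices, depth and width, and `proxy_score` is an invented stand-in for the expensive step of training and validating each candidate; in real NAS that evaluation dominates the cost. Each generation keeps the fittest half of the population and mutates it to produce the other half.

```python
import random

DEPTHS = [1, 2, 3, 4, 5, 6]
WIDTHS = [16, 32, 64, 128, 256]

def proxy_score(arch):
    """Hypothetical fitness that peaks at depth 4, width 64 (stands in for validation accuracy)."""
    depth, width = arch
    return -abs(depth - 4) - abs(WIDTHS.index(width) - WIDTHS.index(64))

def mutate(arch, rng):
    """Randomly change either the depth or the width of an architecture."""
    depth, width = arch
    if rng.random() < 0.5:
        depth = rng.choice(DEPTHS)
    else:
        width = rng.choice(WIDTHS)
    return (depth, width)

def evolve(generations=50, population_size=8, seed=0):
    rng = random.Random(seed)
    population = [(rng.choice(DEPTHS), rng.choice(WIDTHS)) for _ in range(population_size)]
    for _ in range(generations):
        population.sort(key=proxy_score, reverse=True)
        parents = population[: population_size // 2]  # keep the fittest half
        children = [mutate(rng.choice(parents), rng) for _ in parents]
        population = parents + children
    return max(population, key=proxy_score)

print(evolve())
```

Because the best candidates always survive, the best score never gets worse from one generation to the next; with enough generations this toy search tends to settle on the peak of the proxy score.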

Quantum Neural Networks

As quantum computing continues to advance, researchers are exploring the intersection of quantum computing and neural networks. Quantum Neural Networks (QNNs) leverage quantum mechanical principles to potentially achieve computational advantages over classical neural networks.

- Quantum Perceptrons: Neural units that operate on quantum bits instead of classical bits.

- Quantum Backpropagation: Training algorithms adapted for quantum systems.

- Hybrid Quantum-Classical Networks: Combining quantum and classical computing for optimal performance.

- Quantum Feature Maps: Encoding classical data into quantum states for processing.
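A common quantum feature map is angle encoding, where a classical value x sets the rotation of a qubit: applying a Y-rotation RY(x) to |0⟩ gives cos(x/2)|0⟩ + sin(x/2)|1⟩. The sketch below simulates this for a single qubit with ordinary floats (no quantum SDK is assumed) and computes the measurement probabilities, which must sum to 1.

```python
import math

def angle_encode(x):
    """Amplitudes of RY(x)|0> = cos(x/2)|0> + sin(x/2)|1> for a single qubit."""
    return (math.cos(x / 2.0), math.sin(x / 2.0))

def measurement_probabilities(state):
    """Born rule: the probability of each basis outcome is the squared amplitude."""
    a0, a1 = state
    return (a0 * a0, a1 * a1)

for x in (0.0, math.pi / 2, math.pi):
    p0, p1 = measurement_probabilities(angle_encode(x))
    print(f"x={x:.3f}: P(0)={p0:.3f}, P(1)={p1:.3f}")
```

Different input values produce different measurement statistics, which is how the classical feature becomes visible to the rest of a quantum circuit.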

Neuromorphic Computing

Neuromorphic computing represents a paradigm shift in how we implement neural networks in hardware. By designing computer chips that mimic the structure and function of biological brains, neuromorphic systems aim to achieve unprecedented efficiency in neural network computations.

Neuromorphic Innovations

Key developments in neuromorphic computing include:

- Spiking Neural Networks (SNNs) for energy-efficient processing

- Memristive devices for synaptic weight storage

- Event-driven computation models

- Bio-inspired learning algorithms
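Spiking neural networks communicate with discrete spikes rather than continuous activations. A classic building block is the leaky integrate-and-fire (LIF) neuron: its membrane potential leaks toward zero, accumulates input current, and emits a spike (then resets) when it crosses a threshold. The discrete-time sketch below assumes illustrative values for the leak factor and threshold.

```python
def lif_neuron(currents, leak=0.9, threshold=1.0):
    """Discrete-time leaky integrate-and-fire neuron.

    Each step: v = leak * v + I; if v >= threshold, emit a spike (1) and reset v to 0.
    Returns the spike train as a list of 0s and 1s.
    """
    v = 0.0
    spikes = []
    for current in currents:
        v = leak * v + current
        if v >= threshold:
            spikes.append(1)
            v = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes

print(lif_neuron([0.3] * 10))  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

Most time steps produce no spike, which is what makes event-driven, energy-efficient hardware attractive for this kind of model.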

Meta-Learning and Few-Shot Learning

Meta-learning, often described as 'learning to learn,' represents a significant advancement in making neural networks more adaptable and efficient. This approach enables models to learn from limited examples and adapt quickly to new tasks, addressing one of the major limitations of traditional deep learning.

- Model-Agnostic Meta-Learning (MAML): A framework for learning initialization parameters that facilitate quick adaptation.

- Prototypical Networks: Learning prototypical representations for few-shot classification.

- Memory-Augmented Neural Networks: Incorporating external memory for improved meta-learning capabilities.

- Online Meta-Learning: Continuously adapting meta-learning parameters in dynamic environments.
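Prototypical networks classify a new example by comparing it with one "prototype" per class, the mean embedding of that class's few labeled support examples. For brevity, the sketch below skips the learned embedding network (an assumption) and works directly on 2-D points with squared Euclidean distance; the full method also turns negative distances into class probabilities with a softmax.

```python
def mean_vector(vectors):
    return [sum(component) / len(vectors) for component in zip(*vectors)]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def prototypes(support):
    """support: dict label -> list of embedding vectors (the few labeled examples)."""
    return {label: mean_vector(vecs) for label, vecs in support.items()}

def classify(query, protos):
    """Assign the query to the class whose prototype is nearest."""
    return min(protos, key=lambda label: squared_distance(query, protos[label]))

# Two classes with only two examples each (a "2-way, 2-shot" task).
support = {"cat": [[0.0, 1.0], [0.2, 0.8]], "dog": [[1.0, 0.0], [0.8, 0.2]]}
protos = prototypes(support)
print(classify([0.1, 0.9], protos))  # prints cat
```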

Ethical Considerations in Neural Network Development

As neural networks become more powerful and widespread, the ethical implications of their deployment become increasingly important. Developers and researchers must consider various ethical aspects throughout the development lifecycle.

Ethical Frameworks

Critical ethical considerations in neural network development:

- Bias detection and mitigation strategies

- Fairness metrics and evaluation criteria

- Privacy-preserving learning techniques

- Environmental impact assessment

- Transparency and explainability requirements
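Fairness metrics turn these concerns into numbers that can be tracked over time. One of the simplest is the demographic parity difference: the gap between groups in the rate of positive predictions. The sketch below assumes binary predictions and a single group attribute, and the data is made up purely for illustration. A gap of zero on this one metric does not by itself make a model fair.

```python
from collections import defaultdict

def positive_rates(predictions, groups):
    """Fraction of positive (1) predictions for each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for pred, group in zip(predictions, groups):
        counts[group][0] += pred
        counts[group][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}

def demographic_parity_difference(predictions, groups):
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical predictions for eight applicants in two groups, "A" and "B".
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(positive_rates(preds, groups))                 # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```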

Neural Networks in Edge Computing

The deployment of neural networks on edge devices presents unique challenges and opportunities. Edge AI enables real-time processing and reduced latency while maintaining privacy and reducing bandwidth requirements.

- Model Compression: Techniques for reducing model size while maintaining performance.

- Quantization: Reducing numerical precision for efficient computation.

- Knowledge Distillation: Training smaller networks to mimic larger ones.

- Hardware-Aware Neural Architecture: Designing networks optimized for specific hardware constraints.
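Quantization is easy to see in miniature. The sketch below assumes symmetric per-tensor int8 quantization, one of several common schemes: float weights are mapped to integers in [-127, 127] using a single scale factor and then mapped back. Each round-trip error is at most half the scale, while each weight now fits in one byte instead of four.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: w ≈ scale * q, with q an integer in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    return [scale * v for v in q]

weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # small integers that fit in one byte each
print(max(abs(w - r) for w, r in zip(weights, restored)) <= scale / 2)
```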

Self-Supervised Learning in Neural Networks

Self-supervised learning has emerged as a powerful paradigm for training neural networks with limited labeled data. This approach leverages the inherent structure in data to create supervision signals, enabling models to learn rich representations without explicit labels.

Self-Supervised Techniques

Advanced approaches in self-supervised learning:

- Contrastive learning frameworks

- Masked autoencoding strategies

- Predictive coding methods

- Multi-view learning approaches
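Contrastive learning trains an encoder so that two views of the same example (a "positive pair") end up more similar than views of different examples. A common objective is the InfoNCE loss. The sketch below computes it for a single anchor, assuming the embeddings are already given, cosine similarity, and a temperature of 0.1 (an illustrative value).

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE loss for one anchor: -log(exp(s_pos / t) / sum over candidates of exp(s / t))."""
    sims = [cosine(anchor, positive)] + [cosine(anchor, n) for n in negatives]
    logits = [s / temperature for s in sims]
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[0]  # equals -log(softmax probability of the positive)

anchor = [1.0, 0.0]
good = info_nce(anchor, positive=[0.9, 0.1], negatives=[[0.0, 1.0], [-1.0, 0.0]])
bad = info_nce(anchor, positive=[0.0, 1.0], negatives=[[0.9, 0.1], [-1.0, 0.0]])
print(good < bad)  # True: the loss is lower when the positive is the most similar candidate
```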

Neural Networks in Scientific Discovery

Neural networks are increasingly being used as tools for scientific discovery, from particle physics to drug discovery. These applications require specialized architectures and training approaches tailored to scientific domains.

- Physics-Informed Neural Networks: Incorporating physical constraints and laws into network architecture.

- Chemical Property Prediction: Models for molecular property prediction and drug design.

- Astronomical Data Analysis: Networks for processing and analyzing telescope data.

- Climate Modeling: Neural networks for weather prediction and climate change analysis.

Continuous Learning Systems

Continuous learning systems represent the next frontier in neural network development, enabling models to adapt and improve over time while maintaining previously acquired knowledge. This approach addresses the challenge of catastrophic forgetting and enables more dynamic AI systems.

Continuous Learning Strategies

Key approaches to implementing continuous learning:

- Elastic Weight Consolidation for preserving critical parameters

- Dynamic architecture expansion for new tasks

- Memory replay mechanisms for knowledge retention

- Progressive neural networks with lateral connections
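Elastic Weight Consolidation adds a penalty to the new task's loss that discourages moving parameters that mattered for earlier tasks: (λ/2) Σᵢ Fᵢ (θᵢ - θ*ᵢ)², where θ* are the parameters after the previous task and Fᵢ estimates each parameter's importance (typically the diagonal of the Fisher information). The sketch below only evaluates that penalty; the importance values and λ are made up for illustration.

```python
def ewc_penalty(params, old_params, importance, lam=1.0):
    """Elastic Weight Consolidation penalty: (lam / 2) * sum_i F_i * (theta_i - theta*_i)**2."""
    return 0.5 * lam * sum(
        f * (p - p_old) ** 2 for p, p_old, f in zip(params, old_params, importance)
    )

old = [1.0, -2.0]     # parameters after training on the previous task
fisher = [10.0, 0.1]  # parameter 0 was important for that task, parameter 1 was not

# Moving the important parameter by 0.5 costs far more than moving the unimportant one.
print(ewc_penalty([1.5, -2.0], old, fisher))  # 1.25
print(ewc_penalty([1.0, -1.5], old, fisher))  # 0.0125
```

During training on the new task, this penalty is added to the ordinary loss, so gradient descent is free to change unimportant parameters but is pulled back when it disturbs important ones.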

"The future of neural networks lies not just in their technical capabilities, but in their potential to transform how we approach problem-solving across all domains of human knowledge and endeavor."

Quest Lab Writer Team

This article was published by the Quest Lab team of writers, who specialize in researching and exploring technological content on AI, its future, and its impact on the modern world.